Integral Probability Metric.

积分概率度量(integral probability metrics, IPM)用于衡量两个概率分布$p(x)$和$q(x)$之间的“距离” (相似性)。

对于两个概率分布$p(x)$和$q(x)$,可以通过矩(moment)来衡量其相似性,比如一阶矩(均值)或二阶矩(方差);然而相同的低阶矩也可能属于不同的概率分布,比如高斯分布和拉普拉斯分布可能具有相同的均值和方差。

IPM寻找满足某种限制条件的函数集合\(\mathcal{F}\)中的连续函数$f(\cdot)$,使得该函数能够提供足够多的关于矩的信息;然后寻找一个最优的\(f(x)\in \mathcal{F}\)使得两个概率分布$p(x)$和$q(x)$之间的差异最大,该最大差异即为两个分布之间的距离:

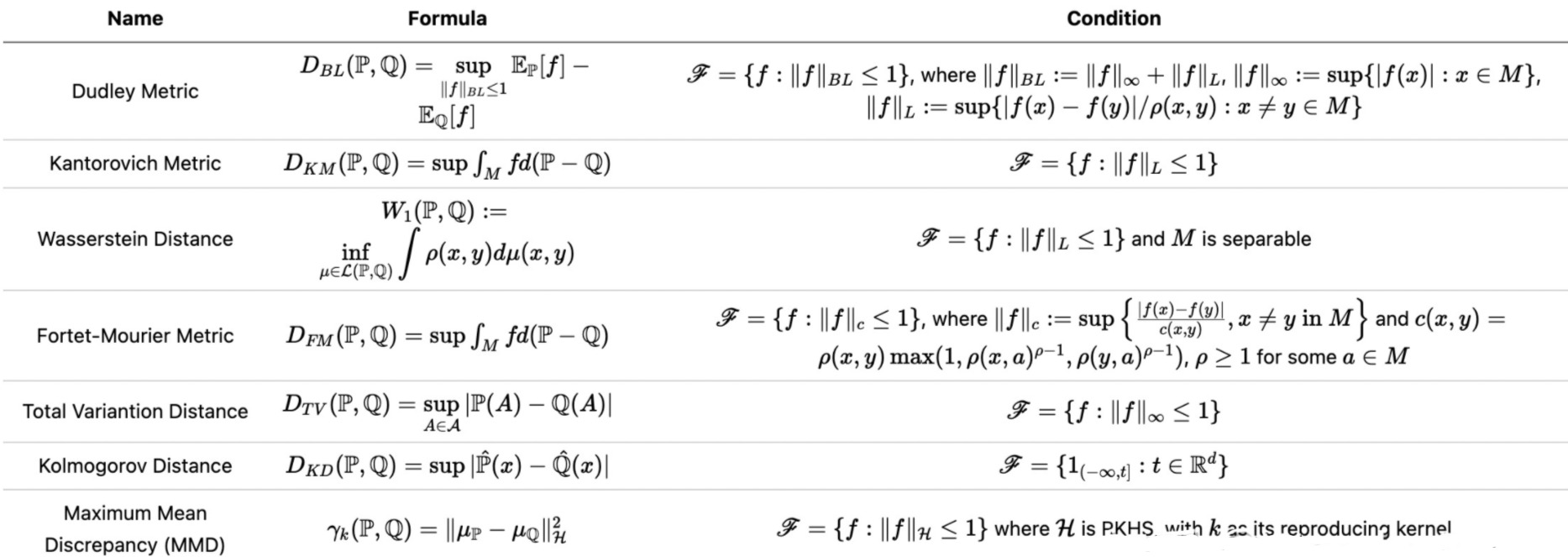

\[d_{\mathcal{F}}(p(x),q(x)) = \mathop{\sup}_{f(x)\in \mathcal{F}} \Bbb{E}_{x \text{~} p(x)}[f(x)]-\Bbb{E}_{x \text{~} q(x)}[f(x)]\]选择不同的函数空间\(\mathcal{F}\),会导致IPM具有不同的形式,下面给出一些常见的距离度量:

值得一提的是,尽管IPM定义的距离度量满足非负性、对称性、三角不等式,但当距离为$0$时并不能严格证明两个分布相等,因此IPM不属于严格的“距离”定义。

下面介绍几种常见的IPM及其性质:

- 总变差 Total Variation

- Wasserstein距离

- 均值和协方差特征匹配 Mean and Covariance Feature Matching

- 最大平均差异 Maximum Mean Discrepancy

- Fisher差异 Fisher Discrepancy

⚪ 总变差 (Total Variation)

总变差既是一种概率散度,又是一种积分概率度量。一般地,$p(x)$和$q(x)$之间的总变差定义为:

\[\begin{aligned} d_{\mathcal{F}}(p(x),q(x)) &= \int |p(x)-q(x)|dx \\ &= \int \mathop{\max}_{f(x) \in [-1,1]} p(x)f(x)-q(x)f(x)dx \\ &=\mathop{\max}_{f(x) \in [-1,1]} \mathbb{E}_{x \text{~}p(x)}[f(x)] -\mathbb{E}_{x \text{~}q(x)}[f(x)] \end{aligned}\]⚪ Wasserstein距离

若约束函数空间\(\mathcal{F}\)满足Lipschitz连续性$||f(x)||_L \leq 1$,则对应Wasserstein距离的对偶形式:

\[W[p(x),q(x)] = \mathop{\sup}_{f,||f||_L\leq 1} \Bbb{E}_{x \text{~} p(x)}[f(x)]-\Bbb{E}_{x \text{~} q(x)}[f(x)]\]⚪ 均值和协方差特征匹配

(1) 均值特征匹配 Mean Feature Matching

定义函数空间\(\mathcal{F}\)为如下形式:

\[\begin{aligned} \mathcal{F}_{v,w,p} = \{ & f(x) = <v,\Phi_w(x)>| \\ &v \in \Bbb{R}^m,||v||_p \leq 1,\\ &\Phi_w(x):\mathcal{X}\to \Bbb{R}^m,w \in \Omega \} \end{aligned}\]其中$v$是$p$范数不超过$1$的$m$维向量,$\Phi_w(\cdot)$是通过$w$参数化的神经网络。则对应的IPM距离为:

\[\begin{aligned} d_{\mathcal{F}}(p(x),q(x)) &= \mathop{\sup}_{f \in \mathcal{F}_{v,w,p}} \Bbb{E}_{x \text{~} p(x)}[f(x)]-\Bbb{E}_{x \text{~} q(x)}[f(x)] \\ &= \mathop{\max}_{w \in \Omega,v,||v||_p \leq 1} <v,\Bbb{E}_{x \text{~} p(x)}[\Phi_w(x)]-\Bbb{E}_{x \text{~} q(x)}[\Phi_w(x)]> \\ &= \mathop{\max}_{w \in \Omega} [\mathop{\max}_{v,||v||_p \leq 1} <v,\Bbb{E}_{x \text{~} p(x)}[\Phi_w(x)]-\Bbb{E}_{x \text{~} q(x)}[\Phi_w(x)]>] \\ &= \mathop{\max}_{w \in \Omega} ||\Bbb{E}_{x \text{~} p(x)}[\Phi_w(x)]-\Bbb{E}_{x \text{~} q(x)}[\Phi_w(x)]||_p \end{aligned}\]上述IPM距离旨在寻找一个最优映射$\Phi_w(\cdot)$使得两个分布映射到$\Phi_w(\cdot)$的特征空间后,其均值的差异最大,对应的最大均值差异即为两个分布之间的距离。

(2) 协方差特征匹配 Covariance Feature Matching

定义函数空间\(\mathcal{F}\)为如下形式:

\[\begin{aligned} \mathcal{F}_{U,V,w} = \{ &f(x) = <U^T\Phi_w(x),V^T\Phi_w(x)>| \\ &U,V \in \Bbb{R}^{m\times k},U^TU=I_k,V^TV=I_k, \\ &\Phi_w(x):\mathcal{X}\to \Bbb{R}^m,w \in \Omega \} \end{aligned}\]其中$\Phi_w(\cdot)$是通过$w$参数化的神经网络。则对应的IPM距离为:

\[\begin{aligned} d_{\mathcal{F}}(p(x),q(x)) &= \mathop{\sup}_{f \in \mathcal{F}_{U,V,w}} \Bbb{E}_{x \text{~} p(x)}[f(x)]-\Bbb{E}_{x \text{~} q(x)}[f(x)] \\ &= \mathop{\max}_{w \in \Omega,U^TU=I_k,V^TV=I_k} U^T<\Bbb{E}_{x \text{~} p(x)}[\Phi_w(x)]-\Bbb{E}_{x \text{~} q(x)}[\Phi_w(x)]),\Bbb{E}_{x \text{~} p(x)}[\Phi_w(x)]-\Bbb{E}_{x \text{~} q(x)}[\Phi_w(x)]>V \\ &= \mathop{\max}_{w \in \Omega,U^TU=I_k,V^TV=I_k} \text{Tr}[U^T(\Bbb{E}_{x \text{~} p(x)}[\Phi_w(x)\Phi^T_w(x)]-\Bbb{E}_{x \text{~} q(x)}[\Phi_w(x)\Phi^T_w(x)])V] \\ &= \mathop{\max}_{w \in \Omega} ||\Bbb{E}_{x \text{~} p(x)}[\Phi_w(x)\Phi^T_w(x)]-\Bbb{E}_{x \text{~} q(x)}[\Phi_w(x)\Phi^T_w(x)]||_{*} \end{aligned}\]上述IPM距离旨在寻找一个最优映射$\Phi_w(\cdot)$使得两个分布映射到$\Phi_w(\cdot)$的特征空间后,其协方差的差异最大。协方差的差异通过核范数(奇异值的和)衡量。

(3) 均值和协方差特征匹配

若同时考虑均值和协方差两个统计特征,则可定义IPM距离如下:

\[\begin{aligned} d_{\mathcal{F}}(p(x),q(x)) = \mathop{\max}_{w \in \Omega} & ||\Bbb{E}_{x \text{~} p(x)}[\Phi_w(x)]-\Bbb{E}_{x \text{~} q(x)}[\Phi_w(x)]||_p \\ & + ||\Bbb{E}_{x \text{~} p(x)}[\Phi_w(x)\Phi^T_w(x)]-\Bbb{E}_{x \text{~} q(x)}[\Phi_w(x)\Phi^T_w(x)]||_{*} \end{aligned}\]⚪ 最大平均差异

最大平均差异(maximum mean discrepancy, MMD)没有直接定义函数空间\(\mathcal{F}\),而是通过核方法在内积空间中间接定义了连续函数$f(\cdot)$,使得函数$f(\cdot)$能够隐式地计算所有阶次的统计量。

MMD将函数$f(\cdot)$限制在再生希尔伯特空间(reproducing kernel Hilbert space, RKHS)的单位球内:$||f||_{\mathcal{H}} \leq 1$。MMD定义如下:

\[\text{MMD}(f,p(x),q(x)) = \mathop{\sup}_{||f||_{\mathcal{H}} \leq 1} \Bbb{E}_{x \text{~} p(x)}[f(x)]-\Bbb{E}_{x \text{~} q(x)}[f(x)]\]根据Riesz表示定理,希尔伯特空间中的函数$f(x)$可以表示为内积形式:

\[f(x) = <f,\phi(x)>_{\mathcal{H}}\]则MMD可以写作:

\[\begin{aligned} \text{MMD}(f,p(x),q(x)) &= \mathop{\sup}_{||f||_{\mathcal{H}} \leq 1} <f,\Bbb{E}_{x \text{~} p(x)}[\phi(x)]>_{\mathcal{H}} -<f,\Bbb{E}_{x \text{~} q(x)}[\phi(x)]>_{\mathcal{H}} \\ &= \mathop{\sup}_{||f||_{\mathcal{H}} \leq 1} <f,\Bbb{E}_{x \text{~} p(x)}[\phi(x)]-\Bbb{E}_{x \text{~} q(x)}[\phi(x)]>_{\mathcal{H}} \end{aligned}\]MMD的平方可以写作:

\[\begin{aligned} \text{MMD}^2(f,p(x),q(x)) &= (\mathop{\sup}_{||f||_{\mathcal{H}} \leq 1} <f,\Bbb{E}_{x \text{~} p(x)}[\phi(x)]-\Bbb{E}_{x \text{~} q(x)}[\phi(x)]>_{\mathcal{H}})^2 \\ &= ||\Bbb{E}_{x \text{~} p(x)}[\phi(x)]-\Bbb{E}_{x \text{~} q(x)}[\phi(x)]||_{\mathcal{H}}^2 \end{aligned}\]其中上式应用了两个向量内积的最大值必定在两向量同方向上取得,即当\(f=\Bbb{E}_{x \text{~} p(x)}[\phi(x)]-\Bbb{E}_{x \text{~} q(x)}[\phi(x)]\)时取得最大值。

对期望的计算应用蒙特卡洛估计,可得:

\[\begin{aligned} \text{MMD}^2(f,p(x),q(x)) &= ||\Bbb{E}_{x \text{~} p(x)}[\phi(x)]-\Bbb{E}_{x \text{~} q(x)}[\phi(x)]||_{\mathcal{H}}^2 \\ &≈ ||\frac{1}{m}\sum_{i=1}^m\phi(x_i)-\frac{1}{n}\sum_{j=1}^n\phi(x_j)||_{\mathcal{H}}^2 \end{aligned}\]引入核函数 $\kappa(x,x’)=<\phi(x),\phi(x’)>_{\mathcal{H}}$,则MMD的平方近似计算为:

\[\begin{aligned} \text{MMD}^2(f,p(x),q(x)) = & \Bbb{E}_{x,x' \text{~} p(x)} [\kappa(x,x')] + \Bbb{E}_{x,x' \text{~} q(x)} [\kappa(x,x')] \\ & -2\Bbb{E}_{x \text{~} p(x),x' \text{~} q(x)} [\kappa(x,x')] \\ ≈ &\frac{1}{m^2}\sum_{i=1}^m\sum_{i'=1}^m \kappa(x_i,x_{i'})+\frac{1}{n^2}\sum_{j=1}^n\sum_{j'=1}^n \kappa(x_j,x_{j'}) \\ & - \frac{2}{mn}\sum_{i=1}^m\sum_{j=1}^n \kappa(x_i,x_j) \end{aligned}\]def compute_mmd(self, px: Tensor, qx: Tensor) -> Tensor:

m, n = px.shape[0], qx.shape[0]

px_kernel = compute_kernel(px, px)

qx_kernel = compute_kernel(qx, qx)

pq_kernel = compute_kernel(px, qx)

mmd = px_kernel.mean() +qx_kernel.mean() - \

2 * pq_kernel.mean()

return mmd

其中正定核$\kappa(x,x’)$可以选择不同的形式,如RBF核 \(\kappa(x,x') = e^{-\frac{\|x-x'\|^2}{\sigma}}\)。

⚪ Fisher差异

- paper:Fisher GAN

Fisher差异(Fisher discrepancy)是一种受线性判别分析启发的IPM距离。

线性判别分析是指把样本集$x$进行投影$w^Tx$,从而让相同类别的样本投影尽可能接近(即类内方差$w^T(\Sigma_0+\Sigma_1)w$尽可能小)、不同类别的样本投影尽可能远离(即类间距离$w^T(\mu_0-\mu_1)(\mu_0-\mu_1)^Tw$尽可能大)。

类似地,Fisher差异不仅最大化两种概率分布的均值差异,还约束了概率分布的二阶矩。定义函数空间\(\mathcal{F}\)为如下形式:

\[\begin{aligned} \mathcal{F} = \{ f(x) : \frac{1}{2}(\Bbb{E}_{x \text{~} p(x)}[f^2(x)]+\Bbb{E}_{x \text{~} q(x)}[f^2(x)])=1 \} \end{aligned}\]则对应的IPM距离为:

\[\begin{aligned} d_{\mathcal{F}}(p(x),q(x)) &= \mathop{\sup}_{f \in \mathcal{F}} \Bbb{E}_{x \text{~} p(x)}[f(x)]-\Bbb{E}_{x \text{~} q(x)}[f(x)] \\ &= \mathop{\sup}_{f,\frac{1}{2}(\Bbb{E}_{x \text{~} p(x)}[f^2(x)]+\Bbb{E}_{x \text{~} q(x)}[f^2(x)])=1} \Bbb{E}_{x \text{~} p(x)}[f(x)]-\Bbb{E}_{x \text{~} q(x)}[f(x)] \\ &= \mathop{\sup}_{f} \frac{\Bbb{E}_{x \text{~} p(x)}[f(x)]-\Bbb{E}_{x \text{~} q(x)}[f(x)]}{\sqrt{\frac{1}{2}(\Bbb{E}_{x \text{~} p(x)}[f^2(x)]+\Bbb{E}_{x \text{~} q(x)}[f^2(x)])}} \end{aligned}\]