PointNet:用于3D点集分类与分割的深度学习模型.

点云(point cloud)是一种无规律性(irregular)的几何数据结构。本文设计了一种可以直接处理点云的神经网络PointNet,PointNet的输入是点云,输出是整个输入的类别标签或者每个输入点的分割或者分块的标签。考虑到输入点云序列不变性的特征,使用一个简单的对称函数(max-pooling)编码无序的点云全局特征。

点云是一组3D点的集合,每一个点最基本应包含坐标$(x,y,z)$,还可以包含颜色,法向量等特征。它具有如下三大主要特性:

- 无序性(Unordered):与图像中的像素数组或者体素网格中的体素数组不同,点云是一系列无序点的集合。神经网络在处理$N$个3D点时,应该对$N!$种输入顺序具有不变性。

- 点之间的相互关系(Interaction Among Points):点集中的大部分点不是孤立的,邻域点一定属于一个有意义的子集。因此,网络需要从近邻点学习到局部结构,以及局部结构的相互关系。

- 变换不变性(Invariance Under Transformations):作为一个几何目标,学习的点特征应该对特定的变换具有不变性。比如旋转和平移变换不会改变全局点云的类别以及每个点的分割结果。

PointNet在处理上述三个特点时采用以下设计:

- 无序性:使用对输入集合的顺序不敏感的对称函数,比如最大池化。

- 交互性:对称函数的聚合操作能够得到全局信息,将点的特征向量与全局特征向量连接起来,就可以让每个点感知到全局的语义信息。

- 变换不变性:对输入做一个标准化操作,比如使用网络训练一个变换矩阵。

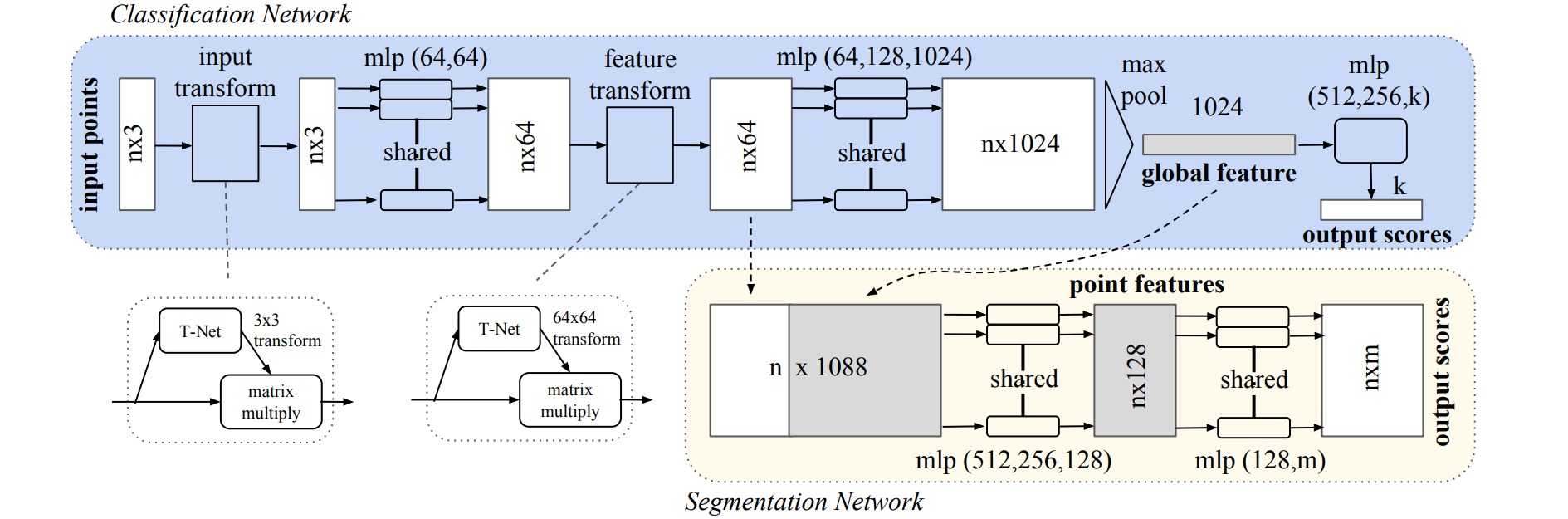

PointNet的网络结构如下:

⚪ 分类网络

分类网络的输入是$n$个三维坐标,通过T-Net预测一个变换矩阵对输入进行变换(矩阵乘法),然后使用MLP对每个点做特征嵌入,之后在特征空间中预测一个变换矩阵对特征进行变换,然后使用MLP再做一次特征增强,最后通过最大池化得到全局特征,并用MLP做分类预测。

⚪ 分割网络

相比分类,分割需要每个点捕捉全局信息后才能知道自己是哪一类,于是把每个点的特征和全局特征进行连接,然后使用MLP做特征增强和分类预测。

⚪ PointNet的Pytorch实现

用于构造变换矩阵的T-Net实现如下:

class STN(nn.Module):

def __init__(self, k):

super(STN, self).__init__()

self.conv1 = torch.nn.Conv1d(k, 64, 1)

self.conv2 = torch.nn.Conv1d(64, 128, 1)

self.conv3 = torch.nn.Conv1d(128, 1024, 1)

self.fc1 = nn.Linear(1024, 512)

self.fc2 = nn.Linear(512, 256)

self.fc3 = nn.Linear(256, k * k)

self.relu = nn.ReLU()

self.bn1 = nn.BatchNorm1d(64)

self.bn2 = nn.BatchNorm1d(128)

self.bn3 = nn.BatchNorm1d(1024)

self.bn4 = nn.BatchNorm1d(512)

self.bn5 = nn.BatchNorm1d(256)

self.k=k

def forward(self, x):

"""

:param x: size[batch,channel(feature_dim),L(length of signal sequence)]

"""

batchsize = x.size()[0]

x = self.relu(self.bn1(self.conv1(x)))

x = self.relu(self.bn2(self.conv2(x)))

x = self.relu(self.bn3(self.conv3(x)))

x = torch.max(x, 2, keepdim=True)[0]

x = x.view(-1, 1024)

x = self.relu4(self.bn4(self.fc1(x)))

x = self.relu5(self.bn5(self.fc2(x)))

x = self.fc3(x)

iden = torch.tensor(torch.from_numpy(np.eye(self.k).flatten().astype(np.float32))).view(1, self.k**2).repeat(

batchsize, 1)

if x.is_cuda:

iden = iden.cuda()

x = x + iden

x = x.view(-1, self.k, self.k)

return x

如果在网络中引入了T-Net,则希望T-Net生成的变换矩阵尽量接近旋转矩阵(即正交矩阵),会使优化过程更稳定。因此额外引入正交性损失:

\[L_{reg} = ||I-AA^T||_F^2\]def feature_transform_reguliarzer(trans):

"""

:param trans: Rotation Matrix

"""

d = trans.size()[1]

I = torch.eye(d)[None, :, :]

if trans.is_cuda:

I = I.cuda()

#网络得到的旋转矩阵应该尽量正交,并以正交性作为损失

loss = torch.mean(torch.norm(torch.bmm(trans, trans.transpose(2, 1)) - I, dim=(1, 2)))

return loss

PointNet中用于提取点云特征的部分实现如下:

class PointNetEncoder(nn.Module):

def __init__(self, channel, transform=False):

"""

:param channel: input channel

:param transform: if use STN

"""

super(PointNetEncoder, self).__init__()

if transform:

self.STN = STN(k=channel)

self.STN_feature = STN(k=64)

self.conv1 = nn.Conv1d(channel,64,1)

self.conv2 = nn.Conv1d(64,64,1)

self.conv3 = nn.Conv1d(64,64,1)

self.conv4 = nn.Conv1d(64,128,1)

self.conv5 = nn.Conv1d(128,1024,1)

self.bn1 = nn.BatchNorm1d(64)

self.bn2 = nn.BatchNorm1d(64)

self.bn3 = nn.BatchNorm1d(64)

self.bn4 = nn.BatchNorm1d(128)

self.bn5 = nn.BatchNorm1d(1024)

self.relu = nn.ReLU()

self.transform=transform

def forward(self, x, global_feature=True):

# global_feature: True:return global_feature False:return cat(points_feature,global_feature)

N = x.shape[2]

if self.transform:

tran1 = self.STN(x)

x = x.transpose(2, 1)

x = torch.bmm(x, tran1)

x = x.transpose(2, 1)

x = self.relu(self.bn1(self.conv1(x)))

x = self.relu(self.bn2(self.conv2(x)))

if self.transform:

tran2 = self.STN_feature(x)

x = x.transpose(2, 1)

x = torch.bmm(x, tran2)

x = x.transpose(2, 1)

if self.transform:

tran_mat = (tran1,tran2)

else:

tran_mat = None

point_wise_feature=x

x = self.relu(self.bn3(self.conv3(x)))

x = self.relu(self.bn4(self.conv4(x)))

x = self.relu(self.bn5(self.conv5(x)))

global_feature_vec = torch.max(x, 2, keepdim=True)[0]

global_feature_vec = global_feature_vec.view(-1,1024)

if global_feature:

return global_feature_vec, tran_mat

else:

temp = global_feature_vec.unsqueeze(2).repeat(1,1,N)

return torch.cat([point_wise_feature,temp],dim=1), tran_mat

PointNet的分类网络实现如下:

class PointNet_cls(nn.Module):

def __init__(self, cls=40, normal_channel=False, transform=False):

super(PointNet_cls, self).__init__()

if normal_channel:

channel = 6

else:

channel = 3

self.feat = PointNetEncoder(channel=channel,transform=transform)

self.fc1 = nn.Linear(1024, 512)

self.fc2 = nn.Linear(512, 256)

self.fc3 = nn.Linear(256, cls)

self.dropout = nn.Dropout(p=0.3)

self.bn1 = nn.BatchNorm1d(512)

self.bn2 = nn.BatchNorm1d(256)

self.relu = nn.ReLU()

self.log_softmax=nn.LogSoftmax(dim=1)

def forward(self, x):

x, trans_mat = self.feat(x)

x = self.relu(self.bn1(self.fc1(x)))

x = self.relu(self.bn2(self.dropout(self.fc2(x))))

x = self.fc3(x)

x = self.log_softmax(x)

return x, trans_mat