PiT:重新思考视觉Transformer的空间维度.

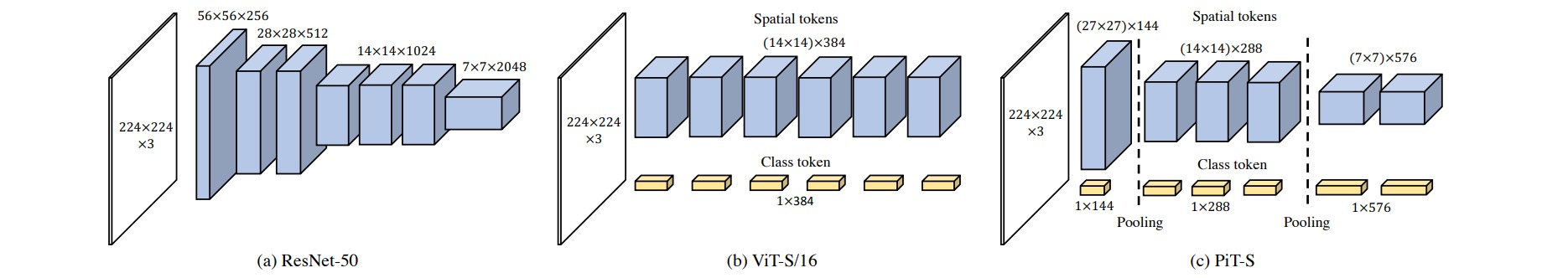

池化pooling是CNN中的一个重要组件,从CNN成功的设计原理出发,本文作者研究了空间尺寸转换的作用及其在基于Transformer的体系结构上的有效性。作者特别遵守CNN的降维原则;随着深度的增加,传统的CNN会增加通道尺寸并减小空间尺寸。从经验上表明,这种空间尺寸的减小也有利于Transformer架构,并在原始ViT模型的基础上提出了一种新颖的基于池化的视觉Transformer(PiT)。

池化层与每一层的感受野大小密切相关。 一些研究表明,池化层有助于提高网络的表现力和泛化性能。为了将池化层的优势扩展到ViT,作者提出了基于池化的视觉Transformer (PiT)。 PiT是结合池化层的Transformer 体系结构。大多数卷积神经网络都有池化层,这些池化层在减小空间尺寸的同时增加通道维数。使用PiT模型可以验证池化层是否像ResNet中一样为ViT带来优势。

视觉Transformer会基于自注意力而不是卷积操作来执行特征提取。 在自注意力机制中,所有位置之间的相似性用于空间交互。为ViT设计的池化层如下图所示。由于ViT以2D矩阵而不是3D张量的形式处理特征,因此池化层应将空间token分离并将其重塑为具有空间结构的3D张量。在reshape之后,通过深度卷积来执行空间大小的减小和通道的增加。并且将返回reshape2D矩阵,用于后续Transformer blocks的计算。

深度卷积旨在以最少的操作来利用少量参数进行空间尺寸的减小和通道尺寸的增大。在ViT中,存在与空间结构不对应的部分CLS token,对于这部分使用一个附加的全连接层来调整通道大小以匹配patch token。

class DepthWiseConv2d(nn.Module):

def __init__(self, dim_in, dim_out, kernel_size, padding, stride, bias = True):

super().__init__()

self.net = nn.Sequential(

nn.Conv2d(dim_in, dim_out, kernel_size = kernel_size, padding = padding, groups = dim_in, stride = stride, bias = bias),

nn.Conv2d(dim_out, dim_out, kernel_size = 1, bias = bias)

)

def forward(self, x):

return self.net(x)

class Pool(nn.Module):

def __init__(self, dim):

super().__init__()

self.downsample = DepthWiseConv2d(dim, dim * 2, kernel_size = 3, stride = 2, padding = 1)

self.cls_ff = nn.Linear(dim, dim * 2)

def forward(self, x):

cls_token, tokens = x[:, :1], x[:, 1:]

cls_token = self.cls_ff(cls_token)

tokens = rearrange(tokens, 'b (h w) c -> b c h w', h = int(sqrt(tokens.shape[1])))

tokens = self.downsample(tokens)

tokens = rearrange(tokens, 'b c h w -> b (h w) c')

return torch.cat((cls_token, tokens), dim = 1)

PiT的完整实现可参考vit-pytorch。

class PiT(nn.Module):

def __init__(

self,

*,

image_size,

patch_size,

num_classes,

dim,

depth,

heads,

mlp_dim,

dim_head = 64,

dropout = 0.,

emb_dropout = 0.,

channels = 3

):

super().__init__()

assert image_size % patch_size == 0, 'Image dimensions must be divisible by the patch size.'

assert isinstance(depth, tuple), 'depth must be a tuple of integers, specifying the number of blocks before each downsizing'

heads = cast_tuple(heads, len(depth))

patch_dim = channels * patch_size ** 2

self.to_patch_embedding = nn.Sequential(

nn.Unfold(kernel_size = patch_size, stride = patch_size // 2),

Rearrange('b c n -> b n c'),

nn.Linear(patch_dim, dim)

)

output_size = conv_output_size(image_size, patch_size, patch_size // 2)

num_patches = output_size ** 2

self.pos_embedding = nn.Parameter(torch.randn(1, num_patches + 1, dim))

self.cls_token = nn.Parameter(torch.randn(1, 1, dim))

self.dropout = nn.Dropout(emb_dropout)

layers = []

for ind, (layer_depth, layer_heads) in enumerate(zip(depth, heads)):

not_last = ind < (len(depth) - 1)

layers.append(Transformer(dim, layer_depth, layer_heads, dim_head, mlp_dim, dropout))

if not_last:

layers.append(Pool(dim))

dim *= 2

self.layers = nn.Sequential(*layers)

self.mlp_head = nn.Sequential(

nn.LayerNorm(dim),

nn.Linear(dim, num_classes)

)

def forward(self, img):

x = self.to_patch_embedding(img)

b, n, _ = x.shape

cls_tokens = repeat(self.cls_token, '() n d -> b n d', b = b)

x = torch.cat((cls_tokens, x), dim=1)

x += self.pos_embedding[:, :n+1]

x = self.dropout(x)

x = self.layers(x)

return self.mlp_head(x[:, 0])